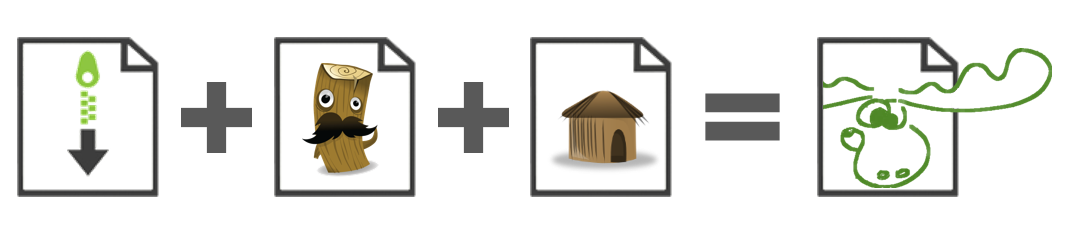

Taking a closer look at some necessary log file analysis, I stumbled upon ELK, a stack made of Elasticsearch + Logstash + Kibana.

So let's start with an example setup of these tools. Logstash comes with a built-in Kibana, but I used the separate one.

Normally I'm the one who gets stuck on simple-to-solve-on-a-Linux-system problems. But I got ELK up and running, so you will as well!

Elasticsearch acts as the indexing data sink.

Logstash takes the logs, transforms them and puts them into Elasticsearch.

Kibana acts as the GUI that nicely displays results from Elasticsearch.

I used Ubuntu 14.04 LTS running as a VMware or VirtualBox VM. You should have a server in place with network connectivity and SSH access. That's my only prerequisite; everything else we're going to build ourselves.

Take care of your server's disk space since we're dealing with log files. In my case there were around 14 GB of old logs (~80 million log lines) to bring into ELK.
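
One quick way to check this before importing is to compare the free space on the target filesystem with the size of the logs. The sketch below is only an illustration: the helper name has_free_kb and the /opt target are assumptions, and the resulting Elasticsearch index may well be larger or smaller than the raw log files.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the filesystem holding directory $1
# has at least $2 kilobytes available.
has_free_kb() {
    # df -P prints a stable POSIX-style table; column 4 is "Available".
    avail_kb="$(df -Pk "$1" 2>/dev/null | awk 'NR == 2 { print $4 }')"
    [ -n "$avail_kb" ] && [ "$avail_kb" -ge "$2" ]
}

# 14 GB of logs expressed in KB (14 * 1024 * 1024).
NEEDED_KB=14680064

if has_free_kb /opt "$NEEDED_KB"; then
    echo "enough free space under /opt"
else
    echo "less than 14 GB free under /opt (or /opt does not exist)"
fi
```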

Install JDK and Apache

elk# apt-get update

elk# apt-get install openjdk-7-jdk

elk# apt-get install apache2

elk# apt-get install curl

Well done. That's it for the server configuration. Not kidding!

Logstash

Download Logstash. I chose the tar.gz version; DEB and RPM packages are available as well.

elk# curl -O https://download.elasticsearch.org/logstash/logstash/logstash-1.4.1.tar.gz

elk# mv logstash-1.4.1.tar.gz /opt

elk# tar xvfz /opt/logstash-1.4.1.tar.gz -C /opt

Before starting Logstash we're going to prepare for log import. Assume a log file with the following structure (space delimited):

#api thread timestamp uid method execution_time result exn id

webapi 1462 2014-05-19T00:01:07.297 00000000-0000-0000-0000-000000000001 GetByName() 0 java.util.ArrayList 0 static

Paste this log line into the file /opt/logstash-1.4.1/20140520.log
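
If you prefer to do that from the shell, something like the following would work. It is just a sketch; the function names write_sample_log and field_count are made up for this post, and the second one only checks that the line really has one value per column of the header.

```shell
#!/bin/sh
# Hypothetical helper: write the sample log line from above to file $1.
write_sample_log() {
    printf '%s\n' 'webapi 1462 2014-05-19T00:01:07.297 00000000-0000-0000-0000-000000000001 GetByName() 0 java.util.ArrayList 0 static' > "$1"
}

# Print the number of space-delimited fields on the first line of file $1.
field_count() {
    awk 'NR == 1 { print NF }' "$1"
}
```

For the setup in this post you would call write_sample_log /opt/logstash-1.4.1/20140520.log; field_count on that file should then report 9, one value per column in the header line.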

Create a file /opt/logstash-1.4.1/import.conf with the following content:

input {

file {

path => [ "/opt/logstash-1.4.1/*.log" ]

start_position => "beginning"

discover_interval => 1

}

}

filter {

grok {

patterns_dir => "/opt/logstash-1.4.1/patterns"

match => [ "message", "%{DATA:api}%{SPACE}%{NUMBER:thread}%{SPACE}%{TIMESTAMP_ISO8601:logdate}%{SPACE}%{UUID:usr}%{SPACE}%{DATA:method}%{SPACE}%{NUMBER:ms}%{SPACE}%{NOTSPACE:result}%{SPACE}%{NOTSPACE:exn}%{SPACE}%{NOTSPACE:id}" ]

}

date {

match => [ "logdate", "ISO8601" ]

}

}

output {

elasticsearch {

host => localhost

}

}

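
To see which column is meant to end up in which field, the mapping behind the grok pattern can be imitated with awk. This is only a sketch to illustrate the field names: it splits on whitespace and does none of grok's validation (number, timestamp, UUID), and the function name show_fields is invented for this example.

```shell
#!/bin/sh
# Hypothetical helper: print name=value pairs for each line of file $1,
# using the field names from the grok pattern above.
show_fields() {
    awk '{
        n = split("api thread logdate usr method ms result exn id", names, " ")
        for (i = 1; i <= NF && i <= n; i++) {
            printf "%s=%s\n", names[i], $i
        }
    }' "$1"
}
```

Running show_fields on the sample log file prints one line per field, which is handy for comparing against what shows up in Kibana later.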

Run Logstash using the newly created config:

elk# /opt/logstash-1.4.1/bin/logstash -f /opt/logstash-1.4.1/import.conf &

Logstash now starts importing all files with the extension .log from the /opt/logstash-1.4.1 directory. You'll see some processing output on the console, since Logstash runs in the background of the current session.

Elasticsearch

Download and run Elasticsearch.

elk# curl -O https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-1.1.1.tar.gz

elk# mv elasticsearch-1.1.1.tar.gz /opt

elk# tar xvfz /opt/elasticsearch-1.1.1.tar.gz -C /opt

elk# /opt/elasticsearch-1.1.1/bin/elasticsearch &

Stop hyperventilating! I know about the issues with sending processes to the background. But it's fine for a simple and fast first impression of ELK. Sure, Logstash and Elasticsearch should be configured as services, but not now.

Kibana

Assuming the Apache web root is located at /var/www, we're moving Kibana to this location.

Download and extract Kibana.

elk# curl -O https://download.elasticsearch.org/kibana/kibana/kibana-3.1.0.tar.gz

elk# mv kibana-3.1.0.tar.gz /var/www

elk# tar xvfz /var/www/kibana-3.1.0.tar.gz -C /var/www && mv /var/www/kibana-3.1.0/* /var/www

Adapt /var/www/config.js to point to the Elasticsearch API, which runs on the same host. Don't use localhost: Kibana 3 runs in your browser, so the address must be reachable from there. Instead use the IP address or fully qualified domain name (FQDN) of your host. Find the elasticsearch parameter in config.js. Example:

elasticsearch: "http://10.0.0.2:9200"

where 10.0.0.2 is the IP address of your Elasticsearch host.
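
If you'd rather not open an editor, the change can be scripted. The following is a minimal sketch, assuming GNU sed (as on Ubuntu), a config.js in which the setting sits on its own line starting with elasticsearch: and is followed by further settings (hence the trailing comma), and a URL that contains no | or & characters. The function name set_kibana_es_url is made up here.

```shell
#!/bin/sh
# Hypothetical helper: point the elasticsearch setting in the Kibana
# config file $1 at the URL given in $2, keeping the line's indentation.
set_kibana_es_url() {
    sed -i "s|^\([[:space:]]*elasticsearch:\).*|\1 \"$2\",|" "$1"
}
```

For this setup the call would be set_kibana_es_url /var/www/config.js http://10.0.0.2:9200. Have a look at the file afterwards to make sure only the intended line changed.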

Done with Kibana.

Are you happy?

No! Point your favourite web browser to http://10.0.0.2/. Now you're happy because Kibana shows up. Use the Logstash Dashboard link to watch your index growing.
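
The same growth can be watched from the shell through the Elasticsearch HTTP API. A minimal sketch, assuming Elasticsearch listens on its default port 9200 and Logstash writes to its default daily logstash-YYYY.MM.dd indices; the ES_URL variable and the count_url helper are names invented for this example. From the server's own shell, localhost is fine here.

```shell
#!/bin/sh
# Base URL of the Elasticsearch HTTP API (assumed default; override via ES_URL).
ES_URL="${ES_URL:-http://localhost:9200}"

# Hypothetical helper: print the _count URL for the index pattern in $1.
count_url() {
    printf '%s/%s/_count?pretty\n' "$ES_URL" "$1"
}

# The root endpoint answers with node and version info when Elasticsearch is up.
curl -s --max-time 5 "$ES_URL/" || echo "Elasticsearch not reachable at $ES_URL"

# Number of documents indexed so far across all Logstash indices.
curl -s --max-time 5 "$(count_url 'logstash-*')" || echo "no document count available"
```

Run it a few times while the import is going and the count should keep increasing.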

Now it's your turn. Browse the Logstash docs for more sophisticated log processing that fits your needs. For the ELK stack in general, start at the Elasticsearch page.

Follow @APGlaeser