For enterprises it's somewhat more complex. Distributed development teams with hundreds of developers have to be integrated by a centralized IT department, which supplies development environments, on-demand testing infrastructure, code repositories, build and test pipelines, automatic infrastructure provisioning, deployment pipelines, and several pre-production and production stages. Sounds huge? It is! But how does DevOps start? Here are three steps to get going.

Talk to each other

Set up a weekly jour fixe (weekly is enough to start with). Attendees are developers, IT staff and management. Discuss business plans, how the business will grow, which features to implement, and what impact this has on providing and running tools and infrastructure. The next step is to let ops take part in your dev planning meetings (this assumes some sort of agile development framework is already in place for your dev teams). But be aware: there is usually one ops person for n dev teams or dev team members. Involving ops in detailed dev planning therefore ends in an ops meeting marathon that leaves them no time to do their jobs, so some sort of abstraction is necessary.

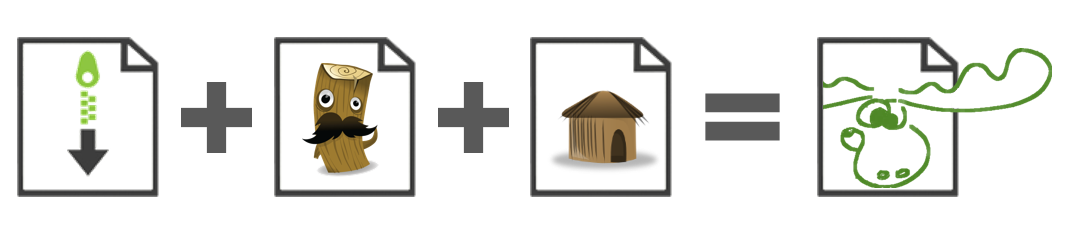

Use Checklists

Before automation is part of your daily business, use checklists to get your software or parts of it to production. I’m serious. Checklists ensure:

- Integrity of deployed software (use for example SHA256 for checksums of your packages)

- Antivirus scan – yes, it's quite easy for some sort of malware to be injected in your build pipeline

- Detailed deployment steps – besides artefacts, which changes to apply, e.g. to configuration files or the database schema (and of course you're using a database versioning tool like Flyway :-) )

- Approval – who finally approves your deployment? This could be a role like product management in conjunction with quality assurance (QA). No approval, no deployment!

- Inform all stakeholders about the new release – keep an email template for announcements. Announce a new release before deployment and again after a successfully finished deployment.

- Ensure all required documents are ready – people would like to know what changed between version 2.0.0 and the new 2.1.0. So release notes are a good idea.
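
The integrity item on the checklist can be backed by a small script. Here is a minimal Python sketch, assuming the expected digest was recorded at build time and travels with the package. The function names `sha256_of` and `verify_package` are made up for illustration.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so large packages do not have to fit into memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_package(path: Path, expected_hex: str) -> bool:
    """Compare the computed digest with the one recorded at build time."""
    return sha256_of(path) == expected_hex.strip().lower()
```

Run `verify_package` as the first step of a deployment and stop right there if it returns `False`.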

A simple example: deploying artefacts to several JBoss instances

Deploying a module, hotfix or any other package across several stages with 15 servers in total will take you, say, 30 minutes per cycle, which includes (per server):

- SSH or RDP login to server

- Distribute package to server

- Copy files to deployment directory

- Check logs for errors

With automation in place, this shrinks to two steps:

- Copy the package to a single distribution server (yes, this assumes you have a machine at your disposal that sits in the same network as your stages)

- Run remote deployment using JBoss management interface

But that doesn't work out of the box. There's a bunch of work to do:

- Configure (management interface) and secure (management user) JBoss servers for remote access

- Adjust firewall settings for remote access

- Maybe you have to set up your own management network with its own IP address range

- Write deployment scripts on the distribution server and run them frequently (as cron jobs, for example)

- Do heavy testing

- Maybe you will run out of JVM PermGen space on frequent deployments when running Java 7

- The outcome of this is an upgrade to a newer JVM version, which has a large impact on your dev and QA teams

- Create an output pipeline such as a website, email notification or monitoring integration to get feedback on your deployments. Silence is no problem as long as everything is fine, but you should get notified in case of deployment errors
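
To illustrate the deployment script and the feedback channel from the list above, here is a rough Python sketch. It assumes `jboss-cli.sh` is on the PATH of the distribution server and that credentials are configured outside the script. Host names, the package path and the management port are placeholders, and the port in particular differs between JBoss versions. Sending the report by email or to your monitoring is left out.

```python
import subprocess
from typing import Callable, Dict, List, Sequence

# Hypothetical host names; replace them with your own stages.
SERVERS = ["int-app-01", "qa-app-01", "prod-app-01"]


def build_deploy_command(host: str, package: str, port: int = 9990) -> List[str]:
    """Build a jboss-cli.sh call that deploys `package` on one server."""
    return [
        "jboss-cli.sh",
        "--connect",
        f"--controller={host}:{port}",  # port is an assumption, check your setup
        f"--command=deploy {package} --force",
    ]


def run_command(command: Sequence[str]) -> int:
    """Run one command and return its exit code."""
    return subprocess.run(command, check=False).returncode


def deploy_everywhere(
    servers: Sequence[str],
    package: str,
    runner: Callable[[Sequence[str]], int] = run_command,
) -> Dict[str, int]:
    """Deploy to every server and return the non-zero exit codes by host."""
    failures: Dict[str, int] = {}
    for host in servers:
        code = runner(build_deploy_command(host, package))
        if code != 0:
            failures[host] = code
    return failures


def format_report(package: str, failures: Dict[str, int]) -> str:
    """Turn the result into one line for email, a website or monitoring."""
    if not failures:
        return f"OK: {package} deployed to all servers"
    details = ", ".join(f"{host} (exit {code})" for host, code in failures.items())
    return f"ERROR: deployment of {package} failed on {details}"
```

The `runner` parameter exists only so the loop can be tried out with a fake command runner before it touches a real server. A cron job would call `deploy_everywhere(SERVERS, ...)` and pass the result of `format_report` to whatever notification channel you use.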

What’s next?

Besides deploying artefacts, next steps could be implementing configuration management tools such as Puppet or Chef to manage the configuration files on your stages. Like your centralized deployment server, there will be a centralized configuration management master that keeps the configuration of your stages up to date.

But before setting up configuration management tools, you should think about streamlining your configuration across your stages. Make use of configuration files for stage-specific settings such as database connectors or IP addresses.

Culture first!

As I wrote in one of my recent articles, DevOps – was bisher geschah (German only), introducing DevOps to grown structures is about 80% culture and 20% tools. So start with culture:

- Blameless Postmortems (1): Get rid of your divided dev and ops thinking. Start analysing incidents without blaming each other.

- Faults happen: Don't try to avoid faults and incidents, but have a plan for when they happen

- Assumption: People are doing their best. People are not evil by default and out to break a system

- Kill features nobody uses. Sounds simple, but try explaining to a manager that features which took investment and manpower to develop should be killed. Have fun! Setbacks are part of the game, though. No management support, no DevOps!

(1) Thanks to @roidrage for giving an overview of this at the Continuous Lifecycle Conference 2014 in Mannheim, Germany